Research Update by PRO and ICCS: Intricacies of human-robot collaboration (HRC) within industrial settings

This blog entry explores the intricacies of human-robot collaboration (HRC) within industrial settings, with a focus on achieving seamless interaction through a skill-based robot task execution engine.

The framework proposed in FELICE coordinates the interplay between the perception layer and the acting/reacting layer in human-robot collaboration, with particular emphasis on the role of Behavior Trees (BTs) in both task execution and deviation handling.

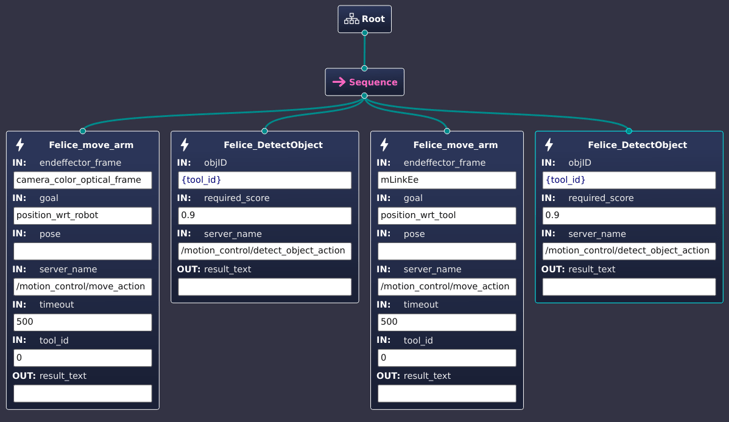

Behavior Trees (BTs) are increasingly favored for human-robot collaborative assembly tasks in industrial settings because of their hierarchical, modular structure [1]. BTs handle complex assembly scenarios well by breaking tasks into manageable sub-tasks, which supports fault tolerance and real-time adaptability. Their organized architecture also simplifies mapping and organizing robot skills, making skill-based task execution more efficient. Modularity comes from sub-trees, which encapsulate reusable parts of a task, and from fallback sub-trees, which provide an alternative branch when a preceding one fails. Fig. 1 shows a BT structure for a pickup skill.
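To make the sequence/fallback structure concrete, here is a minimal Python sketch of a BT for a pickup skill. It is not the FELICE implementation: the node classes, `make_pickup_skill`, and the leaf names (`localize_object`, `move_to_pregrasp`, `close_gripper`, `lift`) are hypothetical, and every leaf completes immediately instead of running asynchronously. A Sequence node ticks its children in order until one does not succeed; a Fallback node ticks its children in order until one does not fail.

```python
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = "SUCCESS"
    FAILURE = "FAILURE"
    RUNNING = "RUNNING"


class Node:
    def tick(self) -> Status:
        raise NotImplementedError


class Leaf(Node):
    """Leaf node wrapping a callable (a condition check or a robot action)."""

    def __init__(self, name: str, fn: Callable[[], Status]) -> None:
        self.name = name
        self.fn = fn

    def tick(self) -> Status:
        return self.fn()


class Sequence(Node):
    """Ticks children in order and returns the first status that is not SUCCESS."""

    def __init__(self, children: List[Node]) -> None:
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback(Node):
    """Ticks children in order and returns the first status that is not FAILURE."""

    def __init__(self, children: List[Node]) -> None:
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def make_pickup_skill(world: Dict[str, bool], log: List[str]) -> Node:
    """Hypothetical pickup skill: (localize if needed) -> approach -> grasp -> lift."""

    def check(key: str) -> Node:
        # Condition leaf: succeeds only if the flag is already set in the world state.
        return Leaf(f"{key}?", lambda: Status.SUCCESS if world.get(key) else Status.FAILURE)

    def act(name: str, sets: str = "") -> Node:
        # Action leaf: records its name, optionally sets a world flag, and succeeds.
        def fn() -> Status:
            log.append(name)
            if sets:
                world[sets] = True
            return Status.SUCCESS
        return Leaf(name, fn)

    # Fallback sub-tree: skip localization when the object pose is already known.
    localize = Fallback([check("object_localized"), act("localize_object", sets="object_localized")])
    # Reusable sub-tree for the grasp itself.
    grasp = Sequence([act("move_to_pregrasp"), act("close_gripper", sets="holding")])
    return Sequence([localize, grasp, act("lift")])


if __name__ == "__main__":
    log: List[str] = []
    status = make_pickup_skill({}, log).tick()
    print(status.name, log)
    # SUCCESS ['localize_object', 'move_to_pregrasp', 'close_gripper', 'lift']
```

With an empty world state all four actions run; if `object_localized` is already set, the Fallback's condition succeeds and localization is skipped. Production BT libraries add RUNNING-state handling, blackboards, and trees loaded from external definitions, all omitted here.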

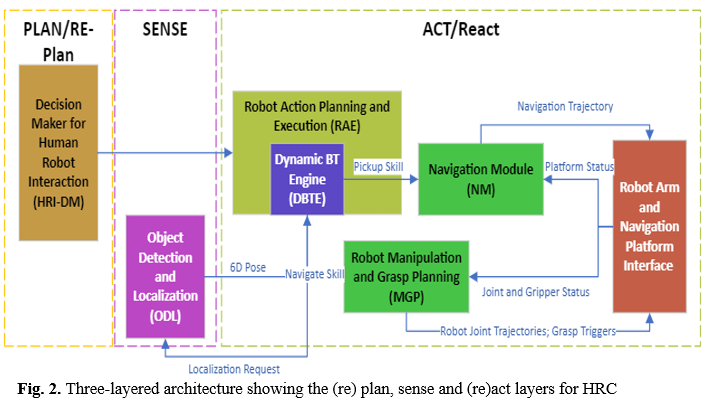

In FELICE, the Behavior Tree-based Task Execution (BTE) is integrated into the acting/reacting layer and works closely with the perception layer, which comprises the robot proprioception and object localization modules (Fig. 2).
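One common way to couple a perception layer with a BT executor is a shared blackboard: perception modules publish their latest results, and condition leaves in the tree read them. The sketch below assumes this pattern; the text does not say how FELICE actually exchanges data between the two layers. `Blackboard`, `object_pose_available`, `arm_at`, the keys, and the thresholds are all hypothetical. Stale or low-confidence data makes a condition return FAILURE, which is the kind of signal deviation handling can react to.

```python
import time
from typing import Any, Dict, List, Optional, Tuple


class Blackboard:
    """Hypothetical shared store: perception writes timestamped values, BT leaves read them."""

    def __init__(self) -> None:
        self._entries: Dict[str, Tuple[Any, float]] = {}

    def write(self, key: str, value: Any, stamp: Optional[float] = None) -> None:
        self._entries[key] = (value, time.monotonic() if stamp is None else stamp)

    def read(self, key: str, max_age: float, now: Optional[float] = None) -> Optional[Any]:
        # Return the value only if it exists and is no older than max_age seconds.
        if key not in self._entries:
            return None
        value, stamp = self._entries[key]
        now = time.monotonic() if now is None else now
        return value if now - stamp <= max_age else None


def object_pose_available(bb: Blackboard, min_confidence: float = 0.8,
                          max_age: float = 1.0, now: Optional[float] = None) -> str:
    """Condition leaf fed by object localization: needs a recent, confident detection."""
    detection = bb.read("object_detection", max_age, now)
    if detection is None or detection["confidence"] < min_confidence:
        return "FAILURE"
    return "SUCCESS"


def arm_at(bb: Blackboard, target: List[float], tol: float = 1e-3,
           max_age: float = 0.1, now: Optional[float] = None) -> str:
    """Condition leaf fed by proprioception: joints must match the target within tol."""
    joints = bb.read("joint_positions", max_age, now)
    if joints is None or len(joints) != len(target):
        return "FAILURE"
    ok = all(abs(q - t) <= tol for q, t in zip(joints, target))
    return "SUCCESS" if ok else "FAILURE"


if __name__ == "__main__":
    bb = Blackboard()
    # Perception side: publish a detection and the current joint state.
    bb.write("object_detection", {"pose": (0.40, 0.10, 0.02), "confidence": 0.93})
    bb.write("joint_positions", [0.0, -1.57, 1.57])
    # Executor side: BT condition leaves read the same blackboard.
    print(object_pose_available(bb), arm_at(bb, [0.0, -1.57, 1.57]))
```

The optional `now` and `stamp` arguments exist so that timing can be controlled in tests; at runtime both default to `time.monotonic()`.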

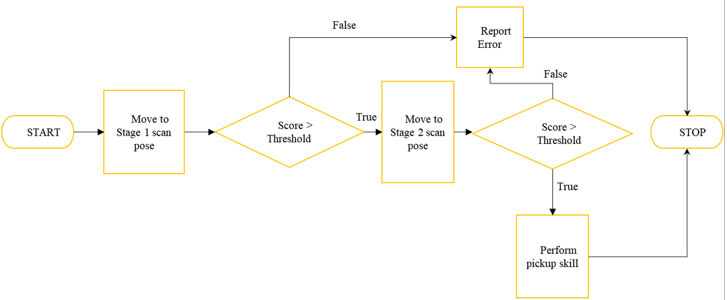

This integration enables efficient detection and handling of deviations, particularly those arising from failures during robotic manipulation (grasping), so that the system responds in real time to dynamic changes in collaborative assembly tasks. Such a change could be a failure of robot localization or a poor object detection result. In BT terms, failure handling is implemented by a Dynamic Behavior Tree Execution Engine (DBTE), which loads a BT in a two-stage approach (Fig. 3).
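The sketch below illustrates deviation handling for a failed grasp inside a single tree. It does not reproduce the DBTE or its two-stage loading, whose details are not given here; it only shows the generic BT pattern of a fallback combined with a bounded retry of a recovery sub-tree. For brevity, nodes are plain Python callables returning `True` (SUCCESS) or `False` (FAILURE) with no RUNNING state, and grasp outcomes are scripted. All names (`sequence`, `fallback`, `retry`, `make_pick_with_recovery`, the action labels) are hypothetical.

```python
from typing import Callable, List

# Simplified node type: a tick returns True for SUCCESS and False for FAILURE (no RUNNING).
Tick = Callable[[], bool]


def sequence(*children: Tick) -> Tick:
    # all() short-circuits: stop at the first child that fails.
    return lambda: all(child() for child in children)


def fallback(*children: Tick) -> Tick:
    # any() short-circuits: stop at the first child that succeeds.
    return lambda: any(child() for child in children)


def retry(child: Tick, attempts: int) -> Tick:
    # Re-tick the child until it succeeds, at most `attempts` times.
    return lambda: any(child() for _ in range(attempts))


def make_pick_with_recovery(grasp_outcomes: List[bool], log: List[str],
                            max_recoveries: int = 2) -> Tick:
    """Hypothetical pick skill whose grasp failures trigger a re-scan and re-grasp."""
    outcomes = iter(grasp_outcomes)

    def act(name: str) -> Tick:
        def fn() -> bool:
            log.append(name)
            return True
        return fn

    def grasp() -> bool:
        ok = next(outcomes, False)  # scripted result; once exhausted, grasps fail
        log.append("grasp_ok" if ok else "grasp_failed")
        return ok

    # Recovery sub-tree: release, re-localize the object, approach again, re-grasp.
    recovery = sequence(act("open_gripper"), act("rescan_object"), act("move_to_pregrasp"), grasp)
    return sequence(
        act("move_to_pregrasp"),
        fallback(grasp, retry(recovery, max_recoveries)),
        act("lift"),
    )


if __name__ == "__main__":
    log: List[str] = []
    print(make_pick_with_recovery([False, True], log)(), log)
    # True ['move_to_pregrasp', 'grasp_failed', 'open_gripper', 'rescan_object',
    #       'move_to_pregrasp', 'grasp_ok', 'lift']
```

Because `all` and `any` short-circuit over generators, `sequence` stops at the first failing child and `fallback` at the first succeeding one, matching BT semantics. If every recovery attempt fails, the whole skill returns `False` without lifting, leaving the failure to be handled higher up.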

In a real-world scenario on the shop floor, the robot arm first moves to a scanning pose defined relative to the robot itself, from which the tool/object is scanned from the side. The arm then moves to a scanning pose defined relative to the tool/object, from which it is scanned from the top. Each time the arm moves to a scanning pose and performs a scan, the RAE interacts with the ODL module.
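The two-step scan can be sketched as a short routine. Assumptions: poses are reduced to 3D positions in the robot base frame (orientation omitted), the top-view pose is a fixed offset above the first object estimate, and the ODL module is stubbed by a `query_odl` callable returning a detection with a confidence score. `scan_for_object`, `Detection`, `move_to`, and the rule of keeping the more confident estimate are hypothetical and not taken from FELICE.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]  # position in the robot base frame (metres)


@dataclass
class Detection:
    position: Vec3      # estimated object position
    confidence: float   # detector confidence in [0, 1]


def offset(p: Vec3, d: Vec3) -> Vec3:
    return (p[0] + d[0], p[1] + d[1], p[2] + d[2])


def scan_for_object(
    move_to: Callable[[Vec3], None],
    query_odl: Callable[[Vec3], Optional[Detection]],
    side_scan_pose: Vec3,
    top_offset: Vec3 = (0.0, 0.0, 0.30),
) -> Optional[Detection]:
    """Hypothetical two-step scan: side view from a robot-relative pose, then a top
    view from a pose placed relative to the first object estimate."""
    # Step 1: pose fixed relative to the robot, viewing the tool/object from the side.
    move_to(side_scan_pose)
    first = query_odl(side_scan_pose)
    if first is None:
        return None  # nothing found: leave it to the calling BT's fallback branch
    # Step 2: pose defined relative to the object estimate, viewing from the top.
    top_pose = offset(first.position, top_offset)
    move_to(top_pose)
    second = query_odl(top_pose)
    # Assumed fusion rule: keep whichever estimate the detector is more confident in.
    if second is None or second.confidence < first.confidence:
        return first
    return second


if __name__ == "__main__":
    visited: List[Vec3] = []
    side = (0.3, -0.3, 0.4)

    def fake_odl(pose: Vec3) -> Optional[Detection]:
        # Stub detector: the top view gives a more confident estimate than the side view.
        return Detection((0.5, 0.0, 0.0), 0.6 if pose == side else 0.9)

    result = scan_for_object(visited.append, fake_odl, side)
    print(visited, result)
    # [(0.3, -0.3, 0.4), (0.5, 0.0, 0.3)] Detection(position=(0.5, 0.0, 0.0), confidence=0.9)
```

Returning `None` when the side scan finds nothing lets the calling BT treat the scan as a failure and route it to a fallback branch, for example a re-scan from a different pose.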

[1] M. Iovino, E. Scukins, J. Styrud, P. Ögren, and C. Smith, “A survey of behavior trees in robotics and AI,” Robotics and Autonomous Systems, vol. 154, p. 104096, 2022.